Metamers

Metamers are an old concept in the study of perception, dating back to the color-matching experiments in the 18th century that first provided support for the existence of three cone types (though it would be another two hundred years before anatomical evidence was found). These color-matching evidences demonstrated that, by combining three colored lights in different proportions, you could generate a color that humans perceived as identical to any other color, even though their physical spectra were different. Perceptual metamers, then, refer to two images that are physically different but perceived as identical.

For the purposes of plenoptic, wherever we say “metamers”, we mean “model metamers”: images that are physically different but have identical representation for a given model, i.e., that the model “perceives” as identical. Like all synthesis methods, it is model-specific, and one potential experiment is to determine if model metamers can serve as human percpetual metamers, which provides support for the model as an accurate representation of the human visual system.

In the Lab for Computational Vision, this goes back to Portilla and Simoncelli, 2001, where the authors created a parametric model of textures and synthesized novel images as a way of demonstrating the cases where the model succeeded and failed. In that paper, the model did purport to have anything to do with human vision, and they did not refer to their images as “metamers”, that term did not appear until Freeman and Simoncelli, 2011, where the authors pool the Portilla and Simoncelli texture statistics in windows laid out in a log-polar fashion to generate putative human perceptual metamers.

This notebook demonstrates how to use the Metamer class to generate model metamers.

[1]:

import imageio

import matplotlib.pyplot as plt

import numpy as np

import torch

import plenoptic as po

# so that relative sizes of axes created by po.imshow and others look right

plt.rcParams["figure.dpi"] = 72

# Animation-related settings

plt.rcParams["animation.html"] = "html5"

# use single-threaded ffmpeg for animation writer

plt.rcParams["animation.writer"] = "ffmpeg"

plt.rcParams["animation.ffmpeg_args"] = ["-threads", "1"]

%load_ext autoreload

%autoreload 2

Basic usage

As with all our synthesis methods, we start by grabbing a target image and initalizing our model.

[2]:

img = po.data.curie()

po.imshow(img)

For the model, we’ll use a simple On-Off model of visual neurons

[3]:

model = po.simul.OnOff((7, 7))

po.tools.remove_grad(model)

Like all of our models, when this is called on the image, it returns a 3d or 4d tensor (in this case, 4d). This representation is what the Metamer class will try to match.

[4]:

print(model(img))

tensor([[[[0.6907, 0.6915, 0.6930, ..., 0.6935, 0.6935, 0.6937],

[0.6917, 0.6924, 0.6934, ..., 0.6934, 0.6936, 0.6939],

[0.6935, 0.6937, 0.6938, ..., 0.6933, 0.6939, 0.6942],

...,

[0.6927, 0.6928, 0.6934, ..., 0.6928, 0.6939, 0.6943],

[0.6944, 0.6943, 0.6941, ..., 0.6930, 0.6936, 0.6938],

[0.6950, 0.6948, 0.6942, ..., 0.6929, 0.6932, 0.6933]],

[[0.6957, 0.6949, 0.6933, ..., 0.6928, 0.6928, 0.6925],

[0.6947, 0.6940, 0.6929, ..., 0.6929, 0.6926, 0.6924],

[0.6928, 0.6925, 0.6924, ..., 0.6930, 0.6924, 0.6921],

...,

[0.6936, 0.6935, 0.6929, ..., 0.6935, 0.6924, 0.6920],

[0.6918, 0.6919, 0.6922, ..., 0.6933, 0.6927, 0.6925],

[0.6912, 0.6914, 0.6920, ..., 0.6934, 0.6931, 0.6930]]]])

/home/billbrod/micromamba/envs/plenoptic/lib/python3.10/site-packages/torch/functional.py:504: UserWarning: torch.meshgrid: in an upcoming release, it will be required to pass the indexing argument. (Triggered internally at ../aten/src/ATen/native/TensorShape.cpp:3526.)

return _VF.meshgrid(tensors, **kwargs) # type: ignore[attr-defined]

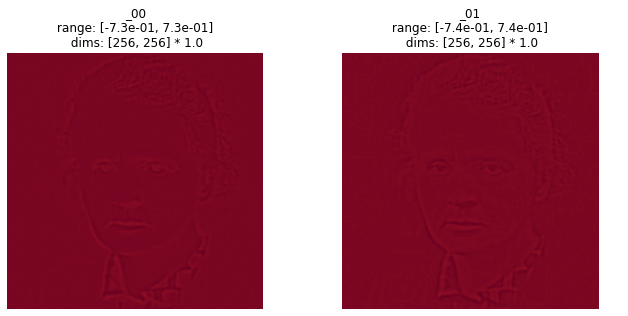

In order to visualize this, we can use the helper function plot_representation (see Display notebook for more details here). In this case, the representation looks like two images, and so we plot it as such:

[5]:

po.tools.display.plot_representation(data=model(img), figsize=(11, 5))

At the simplest, to use Metamer, simply initialize it with the target image and the model, then call .synthesize(). By setting store_progress=True, we update a variety of attributes (all of which start with saved_) on each iteration so we can later examine, for example, the synthesized image over time.

[6]:

met = po.synth.Metamer(img, model)

met.synthesize(store_progress=True, max_iter=50)

/home/billbrod/Documents/plenoptic/src/plenoptic/tools/validate.py:178: UserWarning: model is in training mode, you probably want to call eval() to switch to evaluation mode

warnings.warn(

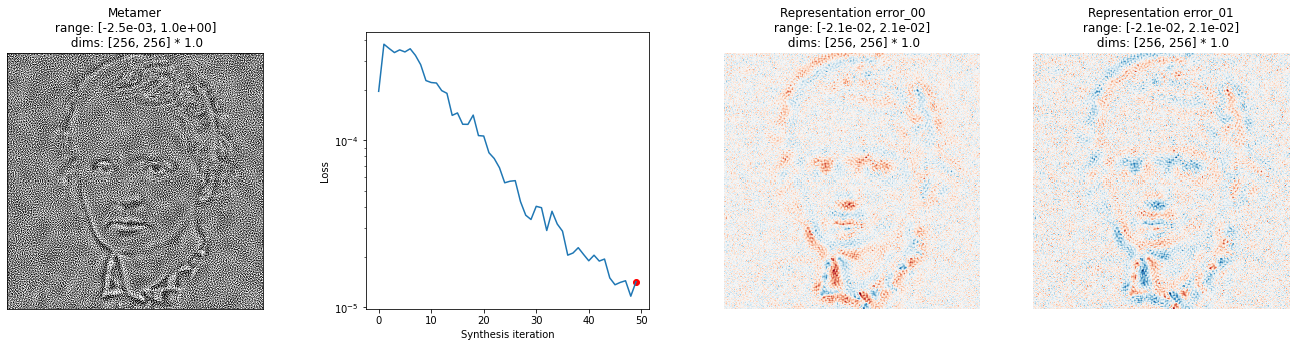

We then call the plot_synthesis_status function to see how things are doing. The image on the left shows the metamer at this moment, while the center plot shows the loss over time, with the red dot pointing out the current loss, and the rightmost plot shows the representation error. If a model has a plot_representation method, this plot can be more informative, but this plot can always be created.

[7]:

# model response error plot has two subplots, so we increase its relative width

po.synth.metamer.plot_synthesis_status(

met, width_ratios={"plot_representation_error": 2}

)

/home/billbrod/Documents/plenoptic/src/plenoptic/tools/display.py:930: UserWarning: ax is not None, so we're ignoring figsize...

warnings.warn("ax is not None, so we're ignoring figsize...")

plot_synthesis_status() is a helper function to show all of this at once, but the individual components can be created separately:

[8]:

fig, axes = plt.subplots(1, 3, figsize=(25, 5), gridspec_kw={"width_ratios": [1, 1, 2]})

po.synth.metamer.display_metamer(met, ax=axes[0])

po.synth.metamer.plot_loss(met, ax=axes[1])

po.synth.metamer.plot_representation_error(met, ax=axes[2])

The loss is decreasing, but clearly there’s much more to go. So let’s continue.

You can resume synthesis as long as you pass the same argument to store_progress on each run (several other arguments, such as optimizer and scheduler, must be None on any run except the first).

Everything that stores the progress of the optimization (loss, saved_model_response, saved_signal) will persist between calls and so potentially get very large.

[9]:

met.synthesize(store_progress=True, max_iter=100)

/home/billbrod/Documents/plenoptic/src/plenoptic/synthesize/metamer.py:195: UserWarning: Loss has converged, stopping synthesis

warnings.warn("Loss has converged, stopping synthesis")

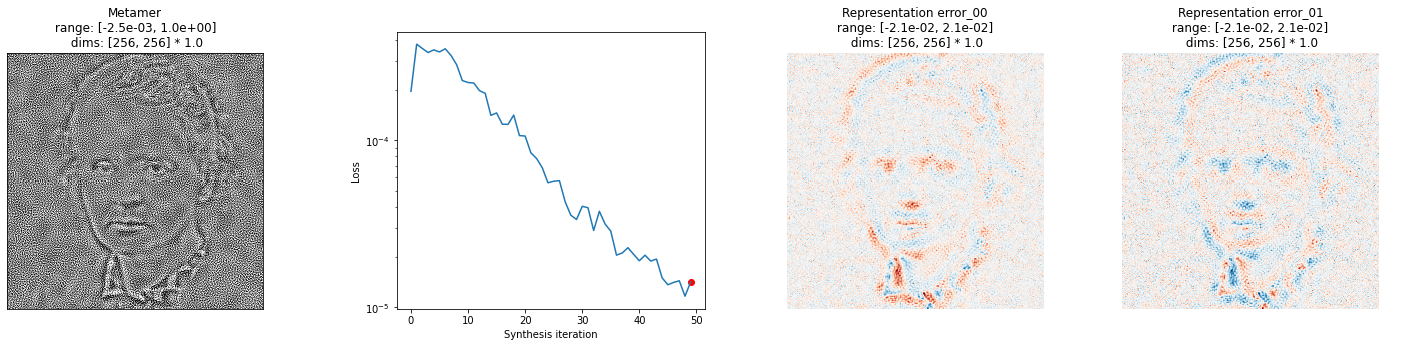

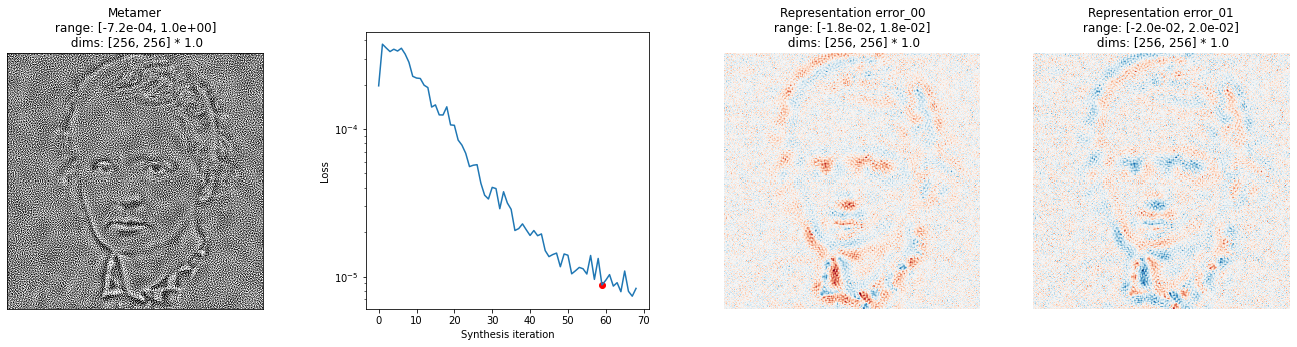

Let’s examine the status again. But instead of looking at the most recent status, let’s look at 10 from the end:

[10]:

po.synth.metamer.plot_synthesis_status(

met, iteration=-10, width_ratios={"plot_representation_error": 2}

)

Since we have the ability to select which iteration to plot (as long as we’ve been storing the information), we can create an animation showing the synthesis over time. This matplotlib.animation object can either be viewed in the notebook (note that this requires the matplotlib configuration options in the first cell of this notebook) or saved as some video format (e.g., anim.save('test.mp4').

[11]:

anim = po.synth.metamer.animate(met, width_ratios={"plot_representation_error": 2})

anim

/home/billbrod/Documents/plenoptic/src/plenoptic/synthesize/metamer.py:1645: UserWarning: Looks like representation is image-like, haven't fully thought out how to best handle rescaling color ranges yet!

warnings.warn("Looks like representation is image-like, haven't fully thought out how"

[11]:

Generally speaking, synthesis will run until you hit max_iter iterations. However, synthesis can also stop if it looks like the loss has stopped changing. This behavior is controlled with the loss_thresh and loss_change_iter arguments: if the loss has changed by less than loss_thresh over the past loss_change_iter iterations, we stop synthesis.

Moving between devices

Metamer has a .to() method for moving the object between devices or dtypes. Call it as you would call any tensor.to and it will move over the necessary attributes.

Saving and loading

Finally, you probably want to save the results of your synthesis. As mentioned above, you can save the synthesis animation, and all of the plots return regular matplotlib Figures and can be manipulated as expected. The synthesized image itself is a tensor and can be detached, converted to a numpy array, and saved (either as an image or array) as you’d expect. po.to_numpy is a convenience function we provide for stuff like this, which detaches the tensor, sends it to the CPU, and converts

it to a numpy array with dtype float32. Note that it doesn’t squeeze the tensor, so you may want to do that yourself.

[12]:

met_image = po.to_numpy(met.metamer).squeeze()

# convert from array to int8 for saving as an image

print(f"Metamer range: ({met_image.min()}, {met_image.max()})")

met_image = po.tools.convert_float_to_int(np.clip(met_image, 0, 1))

imageio.imwrite("test.png", met_image)

Metamer range: (0.00032359999022446573, 1.0005090236663818)

The metamer lies slightly outside the range [0, 1], so we clip before saving as an image. Metamer’s objective function has a quadratic penalty on the synthesized image’s range, and the weight on this penalty can be adjusted by changing the value of range_penalty_lambda at initialization.

You can also save the entire Metamer object. This can be fairly large (depending on how many iterations you ran it for and how frequently you stored progress), but stores all information:

[13]:

met.save("test.pt")

You can then load it back in using the method .load(). Note that you need to first instantiate the Metamer object and then call .load() – it must be instantiated with the same image, model, and loss function in order to load it in!

[14]:

met_copy = po.synth.Metamer(img, model)

# it's modified in place, so this method doesn't return anything

met_copy.load("test.pt")

(met_copy.saved_metamer == met.saved_metamer).all()

/home/billbrod/Documents/plenoptic/src/plenoptic/tools/validate.py:178: UserWarning: model is in training mode, you probably want to call eval() to switch to evaluation mode

warnings.warn(

[14]:

tensor(True)

Because the model itself can be quite large, we do not save it along with the Metamer object. This is why you must initialize it before loading from disk.

Reproducibility

You can set the seed before you call synthesize() for reproducibility by using po.tools.set_seed(). This will set both the pytorch and numpy seeds, but note that we can’t guarantee complete reproducibility: see pytroch docs for some caveats (we currently do not do the stuff described under CuDNN), as well as this issue about resuming state after

saving.

Also note that pytorch does not guarantee identical results between CPU and GPU, even with the same seed.

More Advanced Options

The solution found by the end of the Basic usage section is only one possible metamer. UNTRUE: For this model, it was relatively easy to find a decent metamer (as seen by the relatively low loss and representation error), but that’s not always the case. Optimization in a high-dimensional space with non-linear models is inherently challenging and so we can’t guarantee you’ll find a model metamer, but we do provide some tools / extra functionality to help.

Initialization

By default, the initial_image arg when initializing Metamer is None, in which case we initialize with uniformly-distributed random noise between 0 and 1. If you wish to use some other image for initialization, you can initialize it yourself (it must be the same shape as target_signal) and pass it as the initial_image arg.

Optimization basics

You can set all the various optimization parameters you’d expect. synthesize() has an optimizer arg, which accepts a pytorch optimizer. You can therefore initialize your own optimizer after initializing Metamer like so

[15]:

met = po.synth.Metamer(img, model)

opt = torch.optim.Adam([met.metamer], lr=0.001, amsgrad=True)

met.synthesize(optimizer=opt)

/home/billbrod/Documents/plenoptic/src/plenoptic/synthesize/metamer.py:195: UserWarning: Loss has converged, stopping synthesis

warnings.warn("Loss has converged, stopping synthesis")

synthesize() also accepts a scheduler argument, so that you can pass a pytorch scheduler, which modifies the learning rate during optimization.

Coarse-to-fine optimization

Some models, such as the Portilla-Simoncelli texture statistics, have a multiscale representation of the image, which can complicate the optimization. It’s generally recommended that you normalize the representation (or use a specific loss function) so that the different scales all contribute equally to the representation, but that’s out of the scope of this notebook.

We provide the option to use coarse-to-fine optimization, such that you optimize the different scales separately (starting with the coarsest and then moving progressively finer) and then, at the end, optimizing all of them simultaneously. This was first used in Portilla and Simoncelli, 2000, and can help avoid local optima in image space. Unlike everything else described in this notebook, it will not work for all models. There are two specifications the model must meet:

It must have a

scalesattribute that gives the scales in the order they should be optimized.Its

forward()method must accept ascaleskeyword argument, which accpets a list and causes the model to return only the scale(s) included. SeePortillaSimoncelli.forward()for an example.

We can see that po.simul.PortillaSimoncelli satisfies these constraints, and that the model returns a subset of its output when the scales argument is passed to forward()

[16]:

# we change images to a texture, which the PS model can do a good job capturing

img = po.data.reptile_skin()

ps = po.simul.PortillaSimoncelli(img.shape[-2:])

print(ps.scales)

print(ps.forward(img).shape)

print(ps.forward(img, scales=[0]).shape)

['pixel_statistics', 'residual_lowpass', 3, 2, 1, 0, 'residual_highpass']

torch.Size([1, 1, 1046])

torch.Size([1, 1, 261])

There are two choices for how to handle coarse-to-fine optimization: 'together' or 'separate'. In 'together' (recommended), we start with the coarsest scale and then gradually add each finer scale (thi sis like blurring the objective function and then gradually adding details). In 'separate', we compute the gradient with respect to each scale separately (ignoring the others), then with respect to all of them at the end.

If our model meets the above requirements, then we can use the MetamerCTF class, which uses this coarse-to-fine procedure. We specify which of the two above options are used during initialization, and it will work through the scales as described above (and will resume correctly if you resume synthesis). Note that this will take a while, as it has to go through each scale. Also note that the progress bar now specifies which scale we’re on.

[17]:

met = po.synth.MetamerCTF(

img, ps, loss_function=po.tools.optim.l2_norm, coarse_to_fine="together"

)

met.synthesize(store_progress=True, max_iter=100)

# we don't show our synthesized image here, because it hasn't gone through all the

# scales, and so hasn't finished synthesizing

/home/billbrod/Documents/plenoptic/src/plenoptic/tools/validate.py:211: UserWarning: Validating whether model can work with coarse-to-fine synthesis -- this can take a while!

warnings.warn("Validating whether model can work with coarse-to-fine synthesis -- this can take a while!")

In order to control when synthesis considers a scale to be “done” and move on to the next one, you can set two arguments: change_scale_criterion and ctf_iters_to_check. If the scale-specific loss (current_scale_loss in the progress bar above) has changed by less than change_scale_criterion over the past ctf_iters_to_check iterations, we consider that scale to have reached a local optimum and move on to the next. You can also set change_scale_criterion=None, in which case

we always shift scales after ctf_iters_to_check iterations

[18]:

# initialize with some noise that is approximately mean-matched and with low variance

im_init = torch.rand_like(img) * 0.1 + img.mean()

met = po.synth.MetamerCTF(

img,

ps,

loss_function=po.tools.optim.l2_norm,

initial_image=im_init,

coarse_to_fine="together",

)

met.synthesize(

store_progress=10,

max_iter=500,

change_scale_criterion=None,

ctf_iters_to_check=7,

)

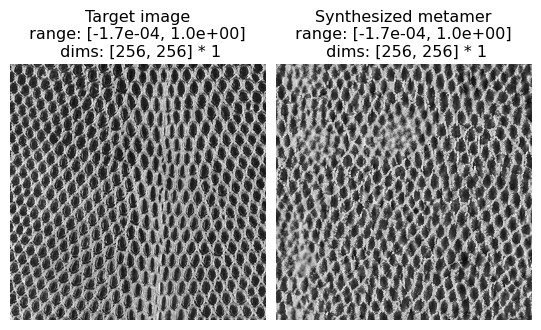

po.imshow(

[met.image, met.metamer],

title=["Target image", "Synthesized metamer"],

vrange="auto1",

);

And we can see these shfits happening in the animation of synthesis:

[19]:

po.synth.metamer.animate(met)

/home/billbrod/Documents/plenoptic/src/plenoptic/tools/display.py:930: UserWarning: ax is not None, so we're ignoring figsize...

warnings.warn("ax is not None, so we're ignoring figsize...")

[19]:

MetamerCTF has several attributes which are used in the course of coarse-to-fine synthesis:

scales_loss: this list contains the scale-specific loss at each iteration (that is, the loss computed on just the scale(s) we’re optimizing on that iteration; which we use to determine when to switch scales).scales: this is a list of the scales in optimization order (i.e., from coarse to fine). The last entry will be'all'(since after we’ve optimized each individual scale, we move on to optimizing all at once). This attribute will be modified by the synthesize() method and is used to track which scale we’re currently optimizing (the first one). When we’ve gone through all the scales present, this will just contain a single value:'all'.scales_timing: this is a dictionary whose keys are the values of scales. The values are lists, with 0 through 2 entries: the first entry is the iteration where we started optimizing this scale, the second is when we stopped (thus if it’s an empty list, we haven’t started optimzing it yet).scales_finished: this is a list of the scales that we’ve finished optimizing (in the order we’ve finished). The union of this andscaleswill be the same asmetamer.model.scales.

A small wrinkle: if coarse_to_fine=='together', then none of these will ever contain the final, finest scale, since that is equivalent to 'all'.